🚀 Testing technologies for large-scale data analysis

I recently read an interesting benchmark that compares DuckDB, SQLite, and Pandas on a dataset of over 1 million rows.

The focus: speed, memory usage, and efficiency in common analysis tasks (aggregations, filters, group-by).
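To make "speed and memory usage" concrete, here is a minimal sketch of how a single task could be timed and its peak memory recorded using only the Python standard library. The `measure` helper and the `sum_of_squares` task are hypothetical names for illustration; this is not the harness used by the benchmark. One caveat: `tracemalloc` only tracks allocations made through Python's allocator, so memory used by native code inside DuckDB or SQLite would generally not be counted, and a process-level measurement would be needed for a fair comparison.

```python
import time
import tracemalloc


def measure(task, *args, **kwargs):
    """Run task once and return (result, elapsed seconds, peak traced bytes)."""
    tracemalloc.start()
    start = time.perf_counter()
    try:
        result = task(*args, **kwargs)
        elapsed = time.perf_counter() - start
        # get_traced_memory() returns (current, peak) in bytes.
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, elapsed, peak


def sum_of_squares(n):
    """Hypothetical stand-in for an analysis task."""
    return sum(i * i for i in range(n))


result, seconds, peak_bytes = measure(sum_of_squares, 1_000)
print(f"result={result} time={seconds:.6f}s peak={peak_bytes} bytes")
```

A single run like this is noisy; repeating the task several times and comparing medians would give a steadier picture.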

🔍 Highlights

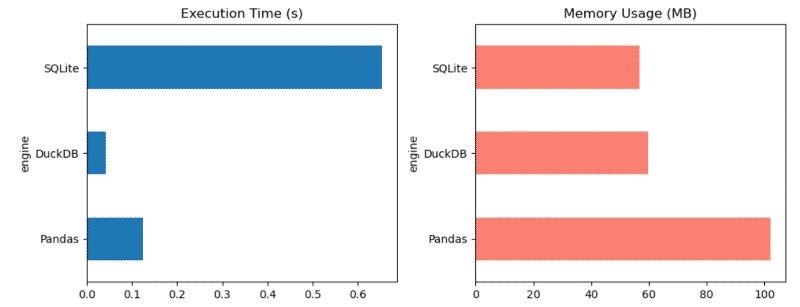

- 🦆 DuckDB: fast, consistent, and very balanced in memory usage.

- 🐼 Pandas: sometimes the fastest, but can consume a lot of RAM.

- 🧱 SQLite: simple and stable, although the slowest of the group.

🧩 In short

If you’re just getting started in data analysis:

- Pandas is like a super-flexible calculator inside Python. Great for exploring data.

- DuckDB works like an SQL engine on your laptop, optimized for fast queries without needing a server.

- SQLite is a lightweight database that stores data in a file and supports SQL queries, but it’s not as optimized for heavy analysis.

Key takeaways:

👉 For fast local analysis, DuckDB is gaining ground.

👉 Pandas remains the standard for data manipulation in Python.

👉 SQLite is useful, but not the most efficient for large volumes.

More information at the link 👇

Also published on LinkedIn.